In the crowded arena of SEO, where attention often turns to keywords and backlinks, the technical SEO term ‘crawl budget’ frequently gets lost in the shuffle. But did you know that a poor understanding of crawl budget could sabotage even the most well-crafted SEO strategy?

That’s right: it can make or break how often search engines visit your site, which pages they index, and ultimately, how visible your content is in search results.

Failing to optimize for crawl budget can lead to decreased traffic, less visibility, and lost revenue opportunities. If you’re investing time, effort, and resources into your website, this is a topic you can’t afford to ignore.

In this article, we’ll explain what crawl budget is and why it should command your attention, along with actionable advice on how to optimize your crawl budget effectively.

What is a crawler?

A crawler (also called a spider or bot) is a program that search engines use to discover and fetch web pages. Google’s crawler, Googlebot, may crawl 6 pages on your site a day, 5,000 pages a day, or even 4,000,000 pages a day. The size of your site, its ‘health’ (how many errors Google encounters), and the number of links pointing to it all influence how many pages Google crawls.

This figure fluctuates slightly from day to day, but it is generally consistent.

How does it work?

A crawler, such as Googlebot, is given a list of URLs on a website to crawl. It works its way through the list in a systematic manner. Several things can cause Google to believe that a URL needs to be crawled.

For example, Google may have discovered new links pointing to the content, or the URL may have been shared on social media or updated in the XML sitemap.

It periodically checks your robots.txt file to ensure that it is still permitted to crawl each URL and then crawls the URLs one by one. Once a spider has crawled a URL and analyzed its contents, it adds any new URLs it discovers on that page to its to-do list.
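The loop described above can be sketched as a toy crawler. It keeps a to-do list (often called the "frontier"), checks each URL against robots.txt, and queues newly discovered links until a fixed page budget runs out. This is a simplified illustration, not how Googlebot actually works. The site, URLs, and robots.txt rules are hypothetical and held in memory, so no network access is needed. `urllib.robotparser` is Python's standard-library robots.txt parser.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical in-memory site: each URL maps to the links found on that page.
SITE = {
    "https://example.com/": ["https://example.com/shoes", "https://example.com/cart"],
    "https://example.com/shoes": ["https://example.com/shoes/red", "https://example.com/"],
    "https://example.com/shoes/red": [],
    "https://example.com/cart": ["https://example.com/checkout"],
    "https://example.com/checkout": [],
}

# Hypothetical robots.txt for the site above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /checkout
"""


def crawl(start_urls, budget, user_agent="Googlebot"):
    """Toy crawl loop: take URLs from the frontier in order, skip anything
    robots.txt disallows, and queue newly discovered links.
    Stops once `budget` pages have been fetched."""
    robots = RobotFileParser()
    robots.parse(ROBOTS_TXT.splitlines())
    frontier = deque(start_urls)
    seen = set(start_urls)
    crawled = []
    while frontier and len(crawled) < budget:
        url = frontier.popleft()
        if not robots.can_fetch(user_agent, url):
            continue  # blocked by robots.txt, so it is never fetched
        crawled.append(url)  # stands in for fetching and parsing the page
        for link in SITE.get(url, []):
            if link not in seen:  # only queue URLs not seen before
                seen.add(link)
                frontier.append(link)
    return crawled


print(crawl(["https://example.com/"], budget=10))
print(crawl(["https://example.com/"], budget=2))
```

With a budget of 10, the sketch fetches the homepage, /shoes, and /shoes/red. It skips /cart because of robots.txt, so /checkout is never even discovered. With a budget of 2, it stops after the homepage and /shoes, which is the crawl budget problem in miniature.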

What is a crawl budget?

The site’s crawl budget is the number of pages that Googlebot will crawl on your website during a given period. This number is limited, and thus it’s important to make sure that your most important pages are crawled first and foremost.

Why is crawl budget important?

Your crawl budget affects how quickly new and updated pages show up in search results. If Googlebot can’t get to all of your pages because your crawl budget is low, some pages will be indexed late or not at all, and changes will take longer to be picked up, which can hurt your rankings over time.

Several factors contribute to your crawl budget, including the size of your website, how many errors Googlebot runs into, the number of external links pointing to your site, and how fast your site loads.

How does Google crawl websites?

Google crawls web pages using bots, collectively known as Googlebot (or spiders), and indexes the words and other information they find. Once a page has been crawled and processed, it can be added to Google’s index, which is what makes it eligible to appear in search results.

When should you worry about the crawl budget?

Most of the time, a site with fewer than a few thousand URLs will be crawled efficiently, according to Google. Hence, if you work on smaller websites, you may not have to worry about the crawl budget.

However, for a large website that generates pages based on URL parameters, you might want to prioritize actions that help Google figure out what to crawl and when.

What is the crawl rate limit?

The crawl rate limit caps how fast Googlebot fetches pages from a site. It covers both the number of parallel connections Googlebot can use to crawl the site at the same time and the time it waits between fetches.

The crawl rate can fluctuate depending on several factors, such as crawl health: if a site responds quickly for a while, the limit goes up, and Googlebot can use more connections to crawl.

Conversely, if the site slows down or responds with server errors, the limit goes down and Googlebot crawls less. Site owners could also set a limit in Search Console to reduce Googlebot’s crawling of their site, although Google has since retired that setting.
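The feedback loop above can be illustrated with a toy model of an adaptive limit. The model uses additive-increase/multiplicative-decrease (AIMD), a common congestion-control pattern that is swapped in here purely for illustration. Google does not publish its actual algorithm, so the class name, thresholds, and step sizes below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CrawlRateLimiter:
    """Toy adaptive crawl rate limit (AIMD). All numbers are hypothetical."""
    connections: int = 2            # current number of parallel connections
    min_connections: int = 1
    max_connections: int = 10
    slow_threshold_ms: float = 1000.0

    def record(self, status: int, response_ms: float) -> int:
        """Update the limit after one fetch and return the new connection count."""
        if status >= 500 or response_ms > self.slow_threshold_ms:
            # Server error or slow response: back off sharply by halving.
            self.connections = max(self.min_connections, self.connections // 2)
        else:
            # Fast, healthy response: grow cautiously, one connection at a time.
            self.connections = min(self.max_connections, self.connections + 1)
        return self.connections


limiter = CrawlRateLimiter()
history = [limiter.record(status, ms) for status, ms in
           [(200, 120), (200, 90), (200, 150), (503, 40), (200, 2500)]]
print(history)  # [3, 4, 5, 2, 1]
```

Growing slowly and shrinking quickly mirrors the behavior the text describes. A healthy server earns more connections over time, while a single 5xx or slow response cuts crawling back at once.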

What is crawl demand?

Popularity and staleness are the two main factors that influence crawl demand. Popularity means that URLs that are more popular on the internet are crawled more often to keep them fresher in Google’s index. Staleness means that Google’s systems try to prevent URLs in the index from becoming stale.

Even if the crawl rate limit isn’t reached, Googlebot will crawl little if there’s no demand from indexing.

Site-wide events such as migrations can also raise crawl demand, because the content needs to be reindexed under the new URLs. Taking crawl rate and crawl demand together, Google defines crawl budget as the number of URLs Googlebot can and wants to crawl.
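One informal way to read that "can and wants to" definition is as the smaller of two quantities. This is an interpretation for intuition only, not a formula Google publishes:

```latex
\text{crawl budget} \approx \min\bigl(
  \underbrace{\text{crawl rate limit}}_{\text{how much Googlebot can crawl}},\;
  \underbrace{\text{crawl demand}}_{\text{how much it wants to crawl}}
\bigr)
```

Under this reading, a fast server does not help if demand is low, and high demand does not help if the server forces the rate limit down.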

How URLs affect Google’s algorithm

If a website has many pages and keeps generating thousands of new URLs, Googlebot’s attention is spread more thinly across them. A website with fewer URLs is therefore easier for search engines to crawl thoroughly.

So remember: the larger a website is and the more pages it has, the more important it becomes to monitor and optimize how often search engines crawl it.

Crawl budget vs. index budget

A crawl budget is not the same as an index budget. The index budget refers to the number of URLs that can be indexed, and it isn’t used up when a page can’t be indexed because it returns an error. The crawl budget, by contrast, is spent on every requested page.

Considerations in crawl budget

If the number of pages on your site exceeds your crawl budget, some pages will be missed, and if Google doesn’t index a page, it won’t rank for anything. That said, the vast majority of websites don’t need to worry about crawl budget, since Google is very good at finding and indexing web pages. There are, however, a few situations in which crawl budget deserves attention:

Website size

If your website (such as an eCommerce site) has 10,000 or more pages, Google may have difficulty discovering them all.

Many new pages added

If you’ve recently added a new section to your site with hundreds of pages, ensure you have enough crawl budget to get them all indexed swiftly.

Many redirects

Lots of redirects and redirect chains eat into your crawl budget.

Crawlers and their significance for SEO

Given the sheer size of the internet, crawling the web is a difficult and expensive process for search engines. Here are a few points to bear in mind.

- The internal linking structure of your site is critical because crawlers use links on websites to find other pages.

- Crawlers pay special attention to new sites, changes to existing sites, and dead links.

- Using an automated method, Google determines which sites to crawl, how often, and how many pages to fetch.

- Your hosting capabilities, such as server resources and bandwidth, impact the crawling process.

How to check for a crawl budget issue

If Google needs to crawl many URLs on your site and crawls a correspondingly large number each day, crawl budget is not an issue.

However, suppose your site has 250,000 pages and Google crawls 2,500 of them a day. Even if every page were crawled exactly once in turn, a full pass would take 100 days (250,000 ÷ 2,500). Because Google crawls some pages, such as the homepage, far more often than others, it could take up to 200 days for Google to notice specific changes to some of your pages. In that case, crawl budget is an issue. If Google crawls 50,000 pages a day, on the other hand, there’s no problem.

Follow the steps below to quickly find out whether your site has a crawl budget issue.

- Determine the number of pages on your site; the number of URLs in your XML sitemaps is a good place to start.

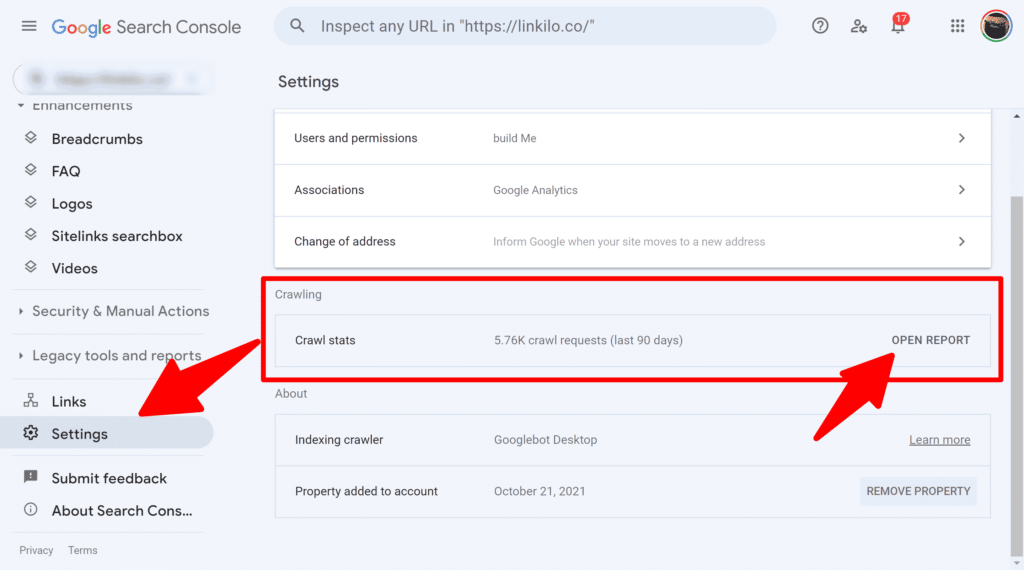

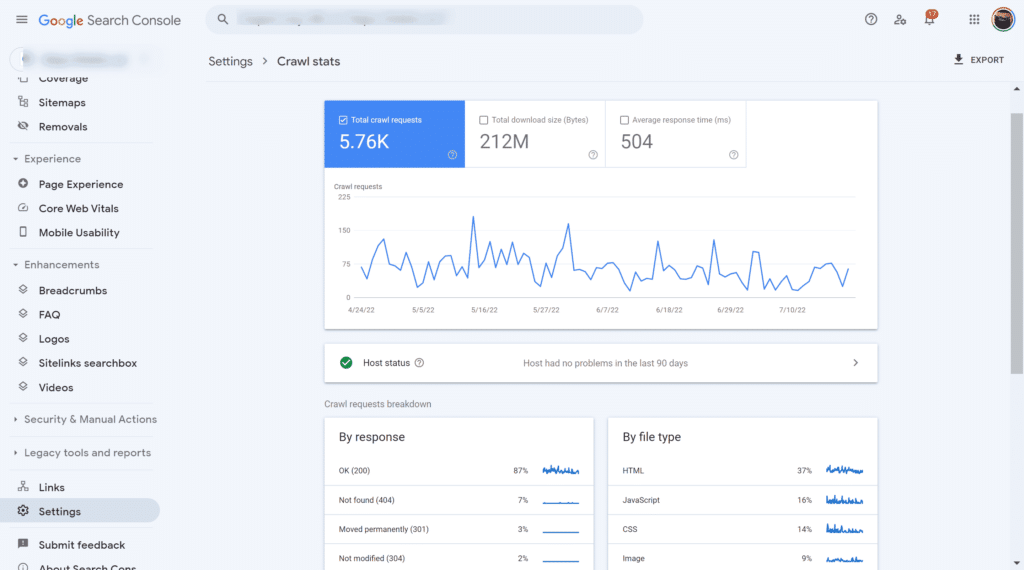

- Navigate to the Google Search Console.

- Go to “Settings” > “Crawl stats” and click “Open Report.”

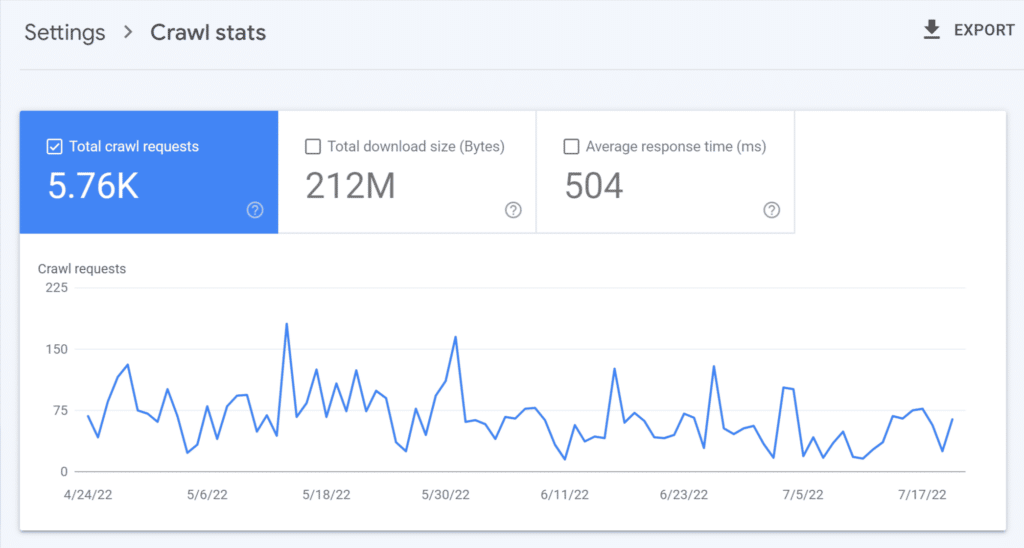

- Note the daily average number of pages crawled.

- Divide the number of pages by the number of “average crawled per day.”

- If the result is higher than about 10 (meaning you have more than 10 times as many pages as Google crawls each day), you should optimize your crawl budget. If it’s lower than 3, crawl budget probably isn’t a concern and your time is better spent on other topics.
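Some of the steps above can be scripted: counting sitemap URLs, then dividing by the “average crawled per day” figure from Search Console. The sketch below assumes a single standard `<urlset>` sitemap. A sitemap index file would need each child sitemap fetched and counted. The rule of thumb only covers results above 10 and below 3, so the "in between" label is this sketch's own assumption.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def count_sitemap_urls(sitemap_xml: str) -> int:
    """Count the <loc> entries in a single <urlset> sitemap."""
    root = ET.fromstring(sitemap_xml)
    return len(root.findall("sm:url/sm:loc", SITEMAP_NS))


def crawl_ratio(total_pages: int, avg_crawled_per_day: float) -> float:
    """Pages on the site divided by Google's average pages crawled per day."""
    return total_pages / avg_crawled_per_day


def assess(ratio: float) -> str:
    if ratio > 10:
        return "optimize your crawl budget"
    if ratio < 3:
        return "crawl budget is probably not a concern"
    return "in between: keep monitoring"  # assumption: the rule of thumb covers only >10 and <3


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/shoes</loc></url>
  <url><loc>https://example.com/shoes/red</loc></url>
</urlset>"""

print(count_sitemap_urls(SAMPLE_SITEMAP))  # 3
ratio = crawl_ratio(250_000, 2_500)
print(ratio, assess(ratio))  # 100.0 optimize your crawl budget
```

The 250,000-page, 2,500-per-day example gives a ratio of 100, far above the threshold of 10.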

What affects the crawl budget?

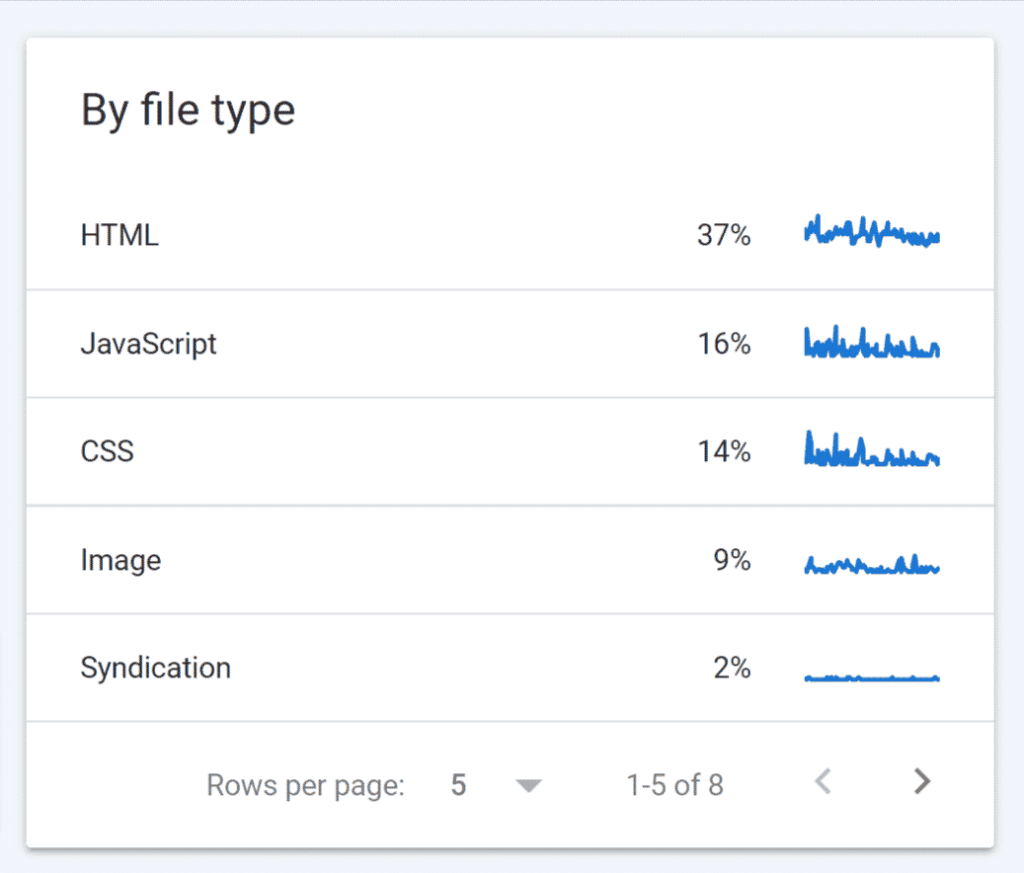

AMP and hreflang alternate URLs, CSS and JavaScript files, and XHR requests all count toward a site’s crawl budget. In other words, every URL and request Googlebot makes, not just HTML pages, can consume crawl budget.

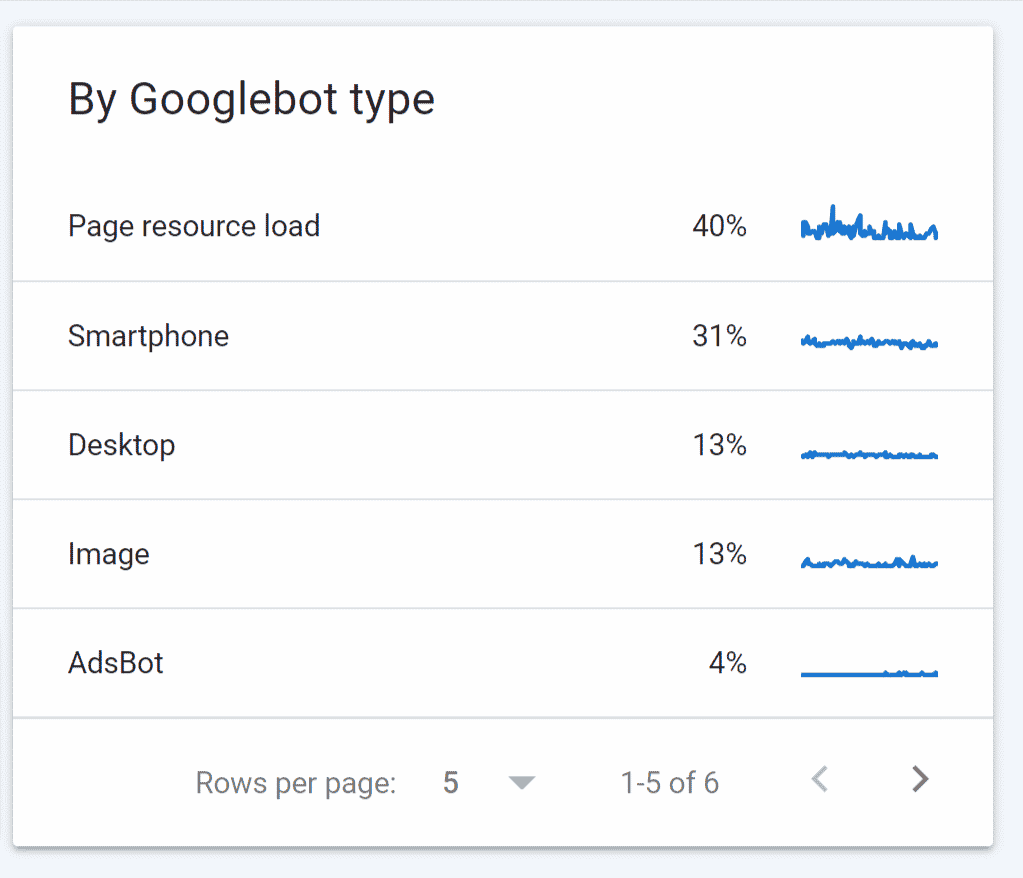

Google finds these URLs by crawling and parsing pages, sitemaps, and RSS feeds, as well as through URLs submitted for indexing in Google Search Console or through the Indexing API. The crawl budget is also shared by Google’s various bots; the Crawl Stats report in GSC lists the different Googlebot types crawling your site.

Ways to improve crawl budget

Here are some steps to help Google better crawl your site.

Allow crawling of your important pages in robots.txt

You can manage robots.txt by hand or with a website auditing tool. With a tool, you import your robots.txt file into your preferred program, allow or disallow crawling of any page on your site in seconds, and then upload the amended file. Editing by hand works too, but on a huge website that needs frequent adjustments, relying on a tool is much easier.
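A quick way to confirm that important pages are not blocked by accident is to run a list of them against your robots.txt. This sketch uses Python's standard `urllib.robotparser`. Note that this parser does plain prefix matching and does not understand Google's `*` and `$` wildcards. The robots.txt content and URLs are hypothetical, including a deliberately over-broad `/products/` rule.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs that robots.txt would stop `user_agent` from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt with an overly broad rule that also catches product pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /products/
"""

IMPORTANT_PAGES = [
    "https://example.com/",
    "https://example.com/products/jeans",
    "https://example.com/blog/crawl-budget",
]

print(blocked_urls(ROBOTS_TXT, IMPORTANT_PAGES))  # ['https://example.com/products/jeans']
```

Running a check like this before uploading an amended robots.txt helps catch rules that block pages you want crawled.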

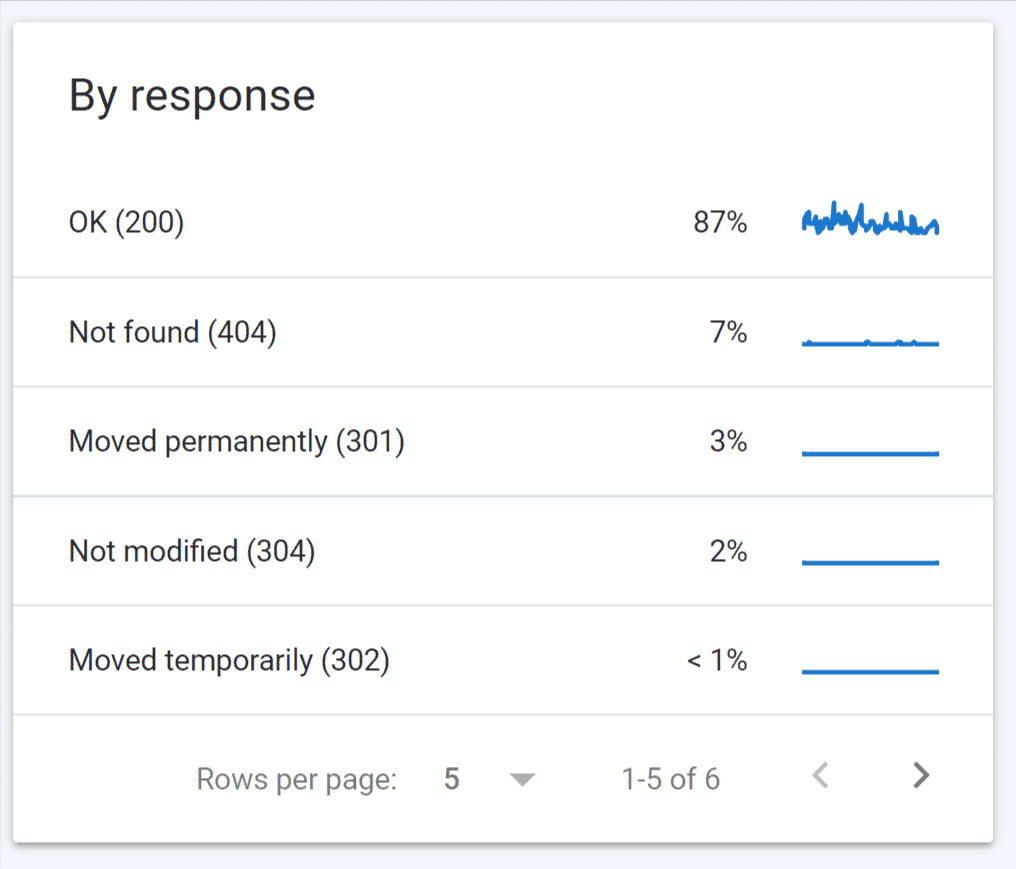

Reduce errors

The first step in getting more pages crawled is making sure that the pages Google crawls return one of two response codes: 200 (“OK”) or 301 (“Go here instead”). Any other response code is a problem. To find these errors, you have to look at your server logs, because Google Analytics and most other analytics programs only track pages that served a 200.

The simplest approach is to collect all URLs that didn’t return 200 or 301 and sort them by how often they were requested. Fixing an error may mean changing code or redirecting the URL to another location; if you know what triggered the error, you can also try to fix it at the source.
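The "collect and sort" approach can be sketched with a short log parser. It assumes your server writes the common Apache/Nginx "combined" log format, and the sample log lines are hypothetical. It matches Googlebot by user-agent string only. User agents can be spoofed, so Google recommends verifying real Googlebot traffic with a reverse DNS lookup, which this sketch does not do.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format (an assumption about your server's log setup).
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
OK_STATUSES = {"200", "301"}


def error_urls(log_lines, bot_token="Googlebot"):
    """Count crawler requests that returned anything other than 200 or 301,
    most frequently requested first."""
    counts = Counter()
    for line in log_lines:
        match = LOG_LINE.match(line)
        if not match or bot_token not in match["agent"]:
            continue  # unparseable line, or not the crawler we care about
        if match["status"] not in OK_STATUSES:
            counts[(match["path"], match["status"])] += 1
    return counts.most_common()


GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:56:02 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "{GOOGLEBOT}"',
    f'66.249.66.1 - - [10/Oct/2023:13:57:11 +0000] "GET /api/stock HTTP/1.1" 503 0 "-" "{GOOGLEBOT}"',
    '203.0.113.5 - - [10/Oct/2023:13:58:00 +0000] "GET /old-page HTTP/1.1" 404 512 "-" "Mozilla/5.0"',
]

for (path, status), hits in error_urls(SAMPLE_LOG):
    print(hits, status, path)
```

On the sample, the sketch reports /old-page (two 404s) ahead of /api/stock (one 503). The browser request for /old-page is ignored because it did not come from Googlebot.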

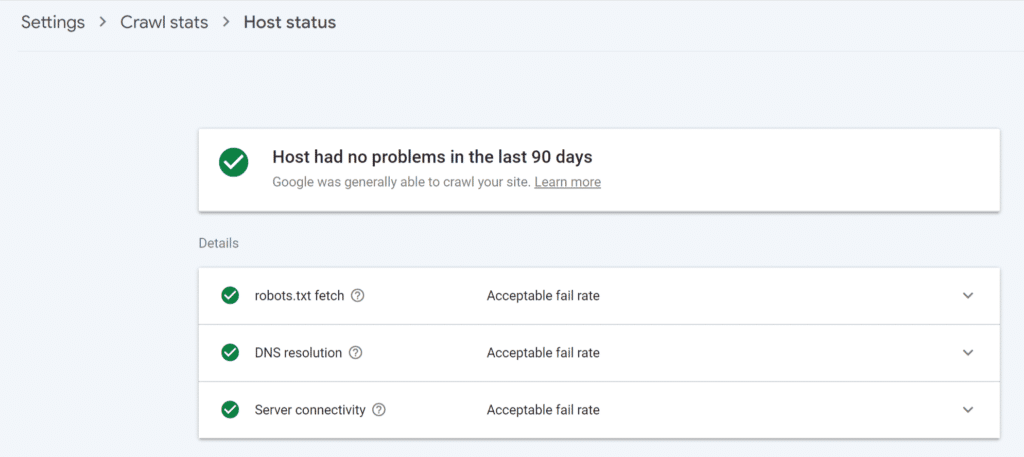

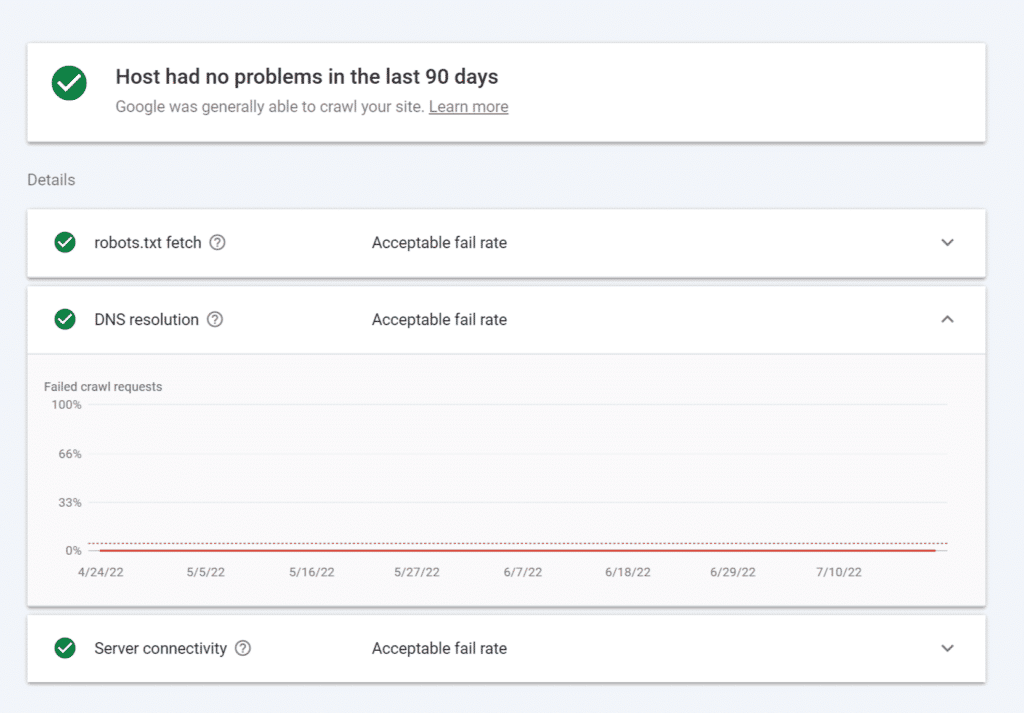

The host status data in the Crawl Stats report shows your site’s general availability over the previous 90 days. Errors here indicate that Google couldn’t crawl your site for technical reasons.

Host availability is evaluated in three categories: robots.txt fetching, DNS resolution, and server connectivity. A significant number of errors in any category can lower availability. Click a category in the report for more details.

For each category, you’ll see a chart of crawl statistics for the period. The chart has a dotted red line; if the metric for that category went above the line on a given day (for example, if DNS resolution failed for more than 5% of requests), it counts as a problem for that category. The status reflects how recently the latest issue occurred.

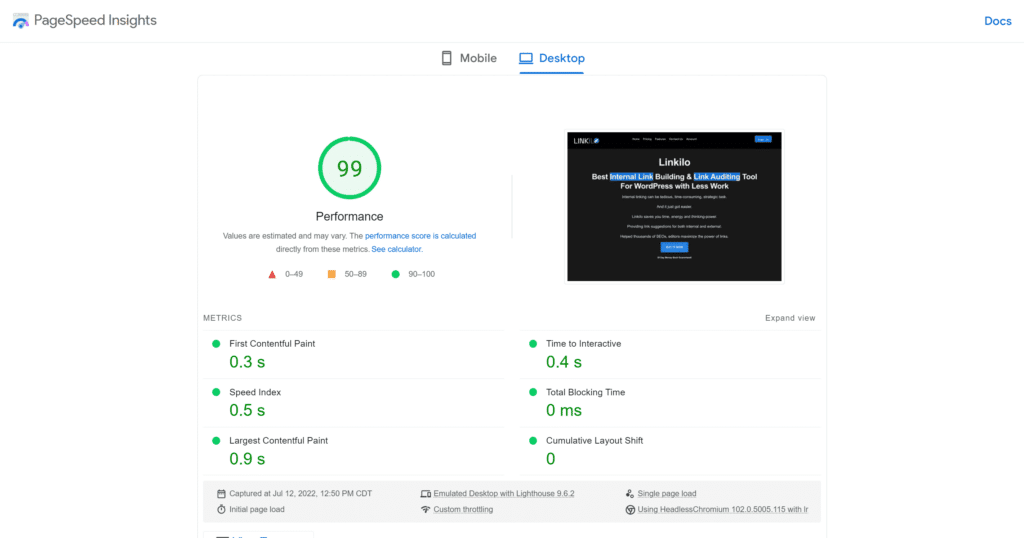

Improve the speed of your site

Google states, “Making a site faster improves the user’s experience while also increasing the crawl rate.” So improving your page performance may let Googlebot crawl more of your site’s URLs. Slow-loading pages eat up Googlebot’s time, whereas fast pages let it visit and index more of your site in the same amount of time.

Block unnecessary parts of your site

Use robots.txt to keep Google from crawling unnecessary parts of your site. eCommerce sites, for example, often offer countless ways to filter products, and every filter can generate new URLs for Google to crawl. In situations like these, make sure you’re allowing Google to crawl only one or two of the filters, not all of them.
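Blocking most filters while keeping one usually relies on wildcard rules. Python's `urllib.robotparser` cannot test those, so below is a minimal hand-written matcher that follows Google's documented robots.txt matching behavior. In that behavior, `*` matches any characters and a trailing `$` anchors the end of the URL. The longest matching rule wins, and allow wins a tie. The sketch skips percent-encoding normalization and handles a single user-agent group. The rules, the `brand` filter, and the URLs are hypothetical.

```python
import re
from urllib.parse import urlsplit


def _pattern_to_regex(pattern: str):
    """Translate a robots.txt path pattern: '*' matches any run of characters,
    and a trailing '$' anchors the match to the end of the URL."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules, url: str) -> bool:
    """rules: (directive, pattern) pairs from one user-agent group.
    The most specific (longest) matching rule wins; on a tie, allow wins."""
    parts = urlsplit(url)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    best = None  # (pattern length, is_allow)
    for directive, pattern in rules:
        if pattern and _pattern_to_regex(pattern).match(target):
            candidate = (len(pattern), directive == "allow")
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]


# Mirrors a hypothetical robots.txt group:
#   Disallow: /*?            <- block every filtered/parameterized URL
#   Allow: /*?brand=         <- ...except the single brand filter
#   Disallow: /*?brand=*&    <- ...but not brand combined with other filters
RULES = [("disallow", "/*?"), ("allow", "/*?brand="), ("disallow", "/*?brand=*&")]

for url in ["https://example.com/jeans",
            "https://example.com/jeans?brand=levis",
            "https://example.com/jeans?color=blue&size=32",
            "https://example.com/jeans?brand=levis&color=blue"]:
    print(is_allowed(RULES, url), url)
```

Only the plain category page and the single-brand filter stay crawlable. Combinations of filters, which multiply fastest, are blocked.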

How to prevent Google from crawling non-canonical URLs

Canonical tags tell Google which version of a page is the preferred, primary version. For example, a product category page for “women’s jeans” at /clothing/women/jeans may let visitors sort by price from low to high (a form of faceted navigation).

Because changing the order of the jeans on the page doesn’t change the content, you wouldn’t want both /clothing/women/jeans?sortBy=PriceLow and /clothing/women/jeans to be indexed. A canonical tag on the sorted version pointing to /clothing/women/jeans tells Google which one to index. Here are other ways to prevent Google from crawling non-canonical URLs.
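For the jeans example, the canonical URL can be derived by stripping parameters that only change presentation. The sketch then builds the `<link rel="canonical">` element for the page's head. Which parameters count as presentation-only is site-specific. The `PRESENTATION_PARAMS` set below is a hypothetical example containing just `sortBy`.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: query parameters that only change presentation (here, sort order),
# not which products the page shows.
PRESENTATION_PARAMS = {"sortBy"}


def canonical_url(url: str) -> str:
    """Drop presentation-only parameters, keeping everything else in order."""
    parts = urlsplit(url)
    kept = [(key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in PRESENTATION_PARAMS]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element to place in the page's <head>."""
    return f'<link rel="canonical" href="{escape(canonical_url(url))}" />'


print(canonical_link_tag("https://example.com/clothing/women/jeans?sortBy=PriceLow"))
# <link rel="canonical" href="https://example.com/clothing/women/jeans" />
print(canonical_url("https://example.com/clothing/women/jeans?color=blue&sortBy=PriceLow"))
# https://example.com/clothing/women/jeans?color=blue
```

Parameters that genuinely change the product set, like `color` here, are kept. Whether those filtered pages should be canonicalized, blocked, or indexed is a separate decision.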

Limit redirect chains

When you 301-redirect a URL, Google sees the new URL and adds it to its to-do list. It doesn’t always follow the redirect immediately; it queues the new URL and moves on. When you chain redirects, for example redirecting non-www to www and then HTTP to HTTPS, everything takes longer to crawl because every URL goes through two redirects.
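One way to remove chains is to compute each old URL's final destination and redirect it there in a single hop. The sketch below flattens a redirect map, such as one exported from your server config or a site crawler. It also raises an error on loops or very long chains. The URLs and the `max_hops` cutoff are hypothetical.

```python
def flatten_redirects(redirects: dict, max_hops: int = 10) -> dict:
    """Map every redirecting URL straight to its final destination, so each
    old URL can be updated to a single 301 hop. Raises on loops or very long chains."""
    flattened = {}
    for start, target in redirects.items():
        seen = {start}
        hops = 1
        while target in redirects:
            if target in seen or hops >= max_hops:
                raise ValueError(f"redirect loop or overly long chain starting at {start}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        flattened[start] = target
    return flattened


# Hypothetical chain: non-www -> www, then HTTP -> HTTPS (two hops).
REDIRECTS = {
    "http://example.com/shoes": "http://www.example.com/shoes",
    "http://www.example.com/shoes": "https://www.example.com/shoes",
}
print(flatten_redirects(REDIRECTS))
```

Both old URLs end up pointing directly at https://www.example.com/shoes, so Googlebot needs only one hop from either of them.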

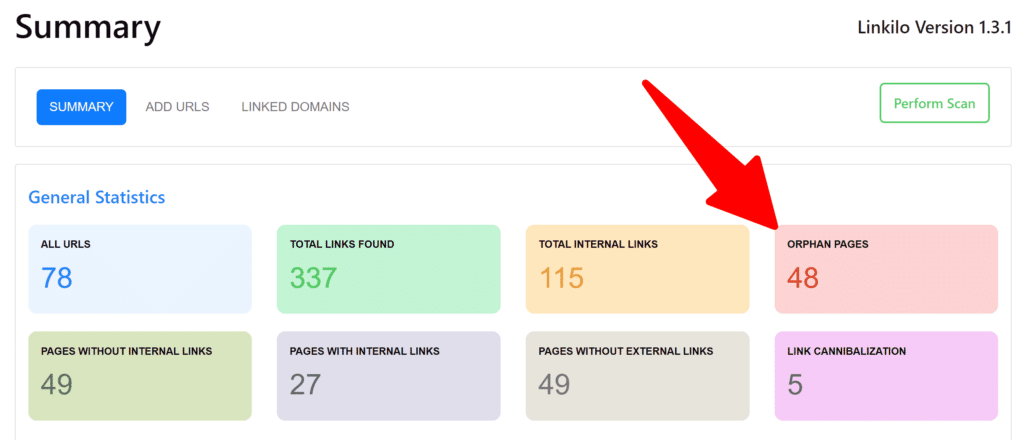

Do you have orphan pages?

Pages that have no internal or external links pointing to them are known as orphan pages. Make sure every page on your site has at least one internal or external link pointing to it because Google has difficulty locating orphan pages.
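A simple first pass at finding orphan pages compares the pages you know exist, for example from your XML sitemap or CMS, with the targets of your internal links. This sketch only sees internal links, so it cannot tell whether an external site links to a page. The page list and link graph are hypothetical.

```python
def find_orphans(known_pages, internal_links, entry_points=("/",)):
    """Pages you know about (e.g. from your XML sitemap or CMS) that no other
    page links to internally. Self-links don't count; the homepage is exempt."""
    linked = {target
              for source, targets in internal_links.items()
              for target in targets
              if target != source}
    return sorted(set(known_pages) - linked - set(entry_points))


SITEMAP_PAGES = ["/", "/jeans", "/jeans/slim", "/summer-sale-2021"]
INTERNAL_LINKS = {
    "/": ["/jeans"],
    "/jeans": ["/jeans/slim", "/jeans"],
    "/jeans/slim": ["/"],
}
print(find_orphans(SITEMAP_PAGES, INTERNAL_LINKS))  # ['/summer-sale-2021']
```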

Avoid duplicate content

Make sure all your site’s pages have unique, high-quality content. Duplicate content should be avoided because it can deplete your crawl budget. This is due to Google’s desire to avoid wasting resources by indexing many pages with the same content.
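Exact duplicates can be spotted by hashing each page's text after light normalization. This sketch collapses whitespace and case, and groups URLs whose hashes match. It only finds identical content. Near-duplicates (for example, pages that differ by a sentence) need fuzzier techniques such as shingling or SimHash. The pages and text below are hypothetical.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict) -> list:
    """Group URLs whose normalized text is identical."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


PAGES = {
    "/jeans": "Women's jeans\n  Slim fit, straight fit",
    "/jeans?sortBy=PriceLow": "women's jeans slim fit,   straight fit",
    "/shirts": "Women's shirts",
}
print(duplicate_groups(PAGES))  # [['/jeans', '/jeans?sortBy=PriceLow']]
```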

Use HTML when possible

Google’s crawler has become much better at crawling JavaScript, but other search engines lag behind, and pages that depend on JavaScript still take extra work to crawl and render. Whenever possible, stick to HTML.

Update your sitemap

Include only canonical URLs in your sitemap, and double-check that it is consistent with the latest version of your robots.txt, so the sitemap doesn’t list URLs that robots.txt blocks.

Use hreflang tags

Crawlers use hreflang tags to understand your localized pages. Add `<link rel="alternate" hreflang="lang_code" href="url_of_page" />` to the head of your page, where “lang_code” is the code for a supported language. If you declare alternates in your XML sitemap instead, use the `<loc>` element for each URL and list its language versions in the same `<url>` entry. That way, you can point to the translated versions of a page.
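Generating the tags from one mapping of language codes to URLs keeps every language version consistent. Each version should carry the same full set, including a link to itself. The optional `x-default` entry is a fallback for visitors whose language matches none of the versions. The function name and URLs below are hypothetical.

```python
from html import escape
from typing import Dict, List, Optional


def hreflang_tags(alternates: Dict[str, str], x_default: Optional[str] = None) -> List[str]:
    """Build the <link rel="alternate" hreflang=...> elements for one set of
    translated pages. The same full set goes in the <head> of every version,
    including a self-reference."""
    tags = [f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
            for lang, url in alternates.items()]
    if x_default:
        # Optional fallback for users whose language matches none of the versions.
        tags.append(f'<link rel="alternate" hreflang="x-default" href="{escape(x_default)}" />')
    return tags


for tag in hreflang_tags({"en": "https://example.com/en/jeans",
                          "de": "https://example.com/de/jeans"},
                         x_default="https://example.com/jeans"):
    print(tag)
```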

Update old content

Google may crawl outdated content less often. If you have a fairly aggressive posting cadence (for example, several articles a day), consider filtering out and reviewing posts older than three months. Isolating posts published before a given date is one way to find stale content on your site.
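Isolating posts published before a cutoff date takes only a few lines. This sketch treats "three months" as 90 days, which is an approximation. The post records are hypothetical; in practice they might come from your CMS export. A fixed `today` keeps the example reproducible.

```python
from datetime import date, timedelta


def stale_posts(posts, today, max_age_days=90):
    """Return posts published before the cutoff, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted((p for p in posts if p["published"] < cutoff),
                  key=lambda p: p["published"])


POSTS = [
    {"url": "/blog/a", "published": date(2024, 1, 15)},
    {"url": "/blog/b", "published": date(2024, 5, 20)},
    {"url": "/blog/c", "published": date(2023, 11, 2)},
]
print([p["url"] for p in stale_posts(POSTS, today=date(2024, 6, 1))])  # ['/blog/c', '/blog/a']
```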

Use internal links to elevate high-performing pages for Google’s crawlers

Google’s crawlers will be more likely to spend their budget on the right pages of your website once you’ve determined which pages Google is crawling, added the relevant robots tags, removed or pruned underperforming pages, and adjusted your sitemap.

Using your internal linking structure to boost those potentially high-performing pages is an advanced technical method. It’s up to you to direct your link equity wisely.

That means allocating link equity to pages that target keywords you have a good chance of ranking for and that bring you traffic from the right customers: those who are more likely to convert and generate revenue.

PageRank sculpting

If your website is brand new, you can get ahead by practicing PageRank sculpting: deliberately channeling link equity through your site architecture and considering it with each new landing page you create.

The next step in crawl budget optimization is understanding the role each link on your website plays in guiding Googlebot through your site and distributing your link equity. Getting your internal linking structure right can significantly improve your money pages’ rankings.

Finally, the best place to invest your crawl budget is in the landing pages most likely to generate income.

To find the pages that would benefit most from PageRank sculpting, you can:

- First, find the pages on your website that get a lot of traffic but have little PageRank. Then add more internal links pointing to those pages to send them more PageRank. Linking to them from your website’s header or footer is a quick way to do this, but don’t overload your navigation menu.

- You can also focus on pages with many internal links but little traffic, search impressions, or ranking for a small number of keywords. Pages that receive a lot of internal links have a lot of PageRank. They’re wasting their PageRank if they’re not using it to drive organic traffic to your site. It’s preferable to redirect PageRank to pages that can make a difference.
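To estimate which pages receive little internal PageRank, you can run the textbook PageRank power iteration over your own internal link graph, for example one exported from a site crawler. This is a simplification under stated assumptions. It ignores external links and nofollow, uses the classic 0.85 damping factor, and requires every linked page to appear as a key. The URLs and traffic figures are hypothetical, and ranking candidates by traffic per unit of PageRank is this sketch's own heuristic.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank power iteration over an internal link graph
    (page -> list of pages it links to). Every page must appear as a key."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1 - damping) / n for page in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                for other in pages:
                    new_rank[other] += damping * rank[page] / n
        rank = new_rank
    return rank


def underlinked(rank, traffic, top_n=1):
    """Hypothetical heuristic: pages with the most traffic per unit of PageRank."""
    return sorted(traffic, key=lambda page: traffic[page] / rank[page], reverse=True)[:top_n]


LINKS = {
    "/": ["/blog", "/about", "/jeans"],
    "/blog": ["/", "/about"],
    "/about": ["/"],
    "/jeans": ["/"],
    "/jeans/slim": ["/jeans"],  # nothing links *to* this page
}
TRAFFIC = {"/": 1000, "/blog": 50, "/about": 10, "/jeans": 400, "/jeans/slim": 300}

ranks = pagerank(LINKS)
print(underlinked(ranks, TRAFFIC))  # ['/jeans/slim']
```

Here /jeans/slim attracts solid traffic but has no internal links pointing to it, so it is the first candidate for more internal links. Reversing the heuristic (lots of PageRank, little traffic) surfaces the pages the second bullet describes.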

Use nofollow links

In general, nofollowing links can be an effective way to limit crawling, or more accurately, to use crawl budget more efficiently. Note that the linked page can still be indexed. A better use case for nofollow is listing pages.

Depending on the size of your site, you may not want Google wasting its time on those particular listings. Keep in mind, though, that you still lose PageRank with a nofollow link. It still counts as a link, so you’re giving up PageRank that would otherwise have flowed through it as a followed link.

Noindex, nofollow

In this scenario, the page can still be crawled. Using noindex, nofollow is a popular solution for pages like these on eCommerce sites. However, once Google reaches the page, it sees the noindex directive and crawls the page much less frequently over time, because crawling a page that can’t be indexed is pointless.

The page can’t be indexed; that’s why it’s called noindex. It still receives PageRank, but because of the nofollow directive in its head section, it doesn’t pass any PageRank outward.

Noindex, follow

Noindex, follow has been used as a sort of best-of-both-worlds approach: keep the page out of the index while still passing PageRank through its links. That no longer holds, because a noindex, follow page eventually gets treated like noindex, nofollow. Google said a few years back that as it crawls such a page less and less over time, it stops seeing the links, and they effectively stop counting. So, once again, the answer is a compromise.
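To audit which of these directives a page actually carries, you can read its robots meta tags with Python's standard `html.parser`. The sketch checks `<meta name="robots">` and `<meta name="googlebot">` and treats `none` as shorthand for noindex, nofollow. It does not look at `X-Robots-Tag` HTTP headers, which can set the same directives. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self, bot_name="googlebot"):
        super().__init__()
        self.names = {"robots", bot_name.lower()}
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in self.names:
            for directive in (attributes.get("content") or "").split(","):
                self.directives.add(directive.strip().lower())


def robots_status(html_text):
    """Report whether a page is indexable and whether its links are followed."""
    parser = RobotsMetaParser()
    parser.feed(html_text)
    found = parser.directives
    blocked_all = "none" in found  # "none" is shorthand for noindex, nofollow
    return {"indexable": not (blocked_all or "noindex" in found),
            "links_followed": not (blocked_all or "nofollow" in found)}


print(robots_status('<head><meta name="robots" content="noindex, nofollow"></head>'))
print(robots_status('<head><meta name="robots" content="noindex, follow"></head>'))
print(robots_status("<head><title>No robots meta tag</title></head>"))
```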

Why do search engines assign crawl budgets to websites?

Search engines need a way to prioritize their crawling, and allocating a crawl budget to each website makes this easier. Their crawling resources are limited, and they must divide their attention among millions of websites.

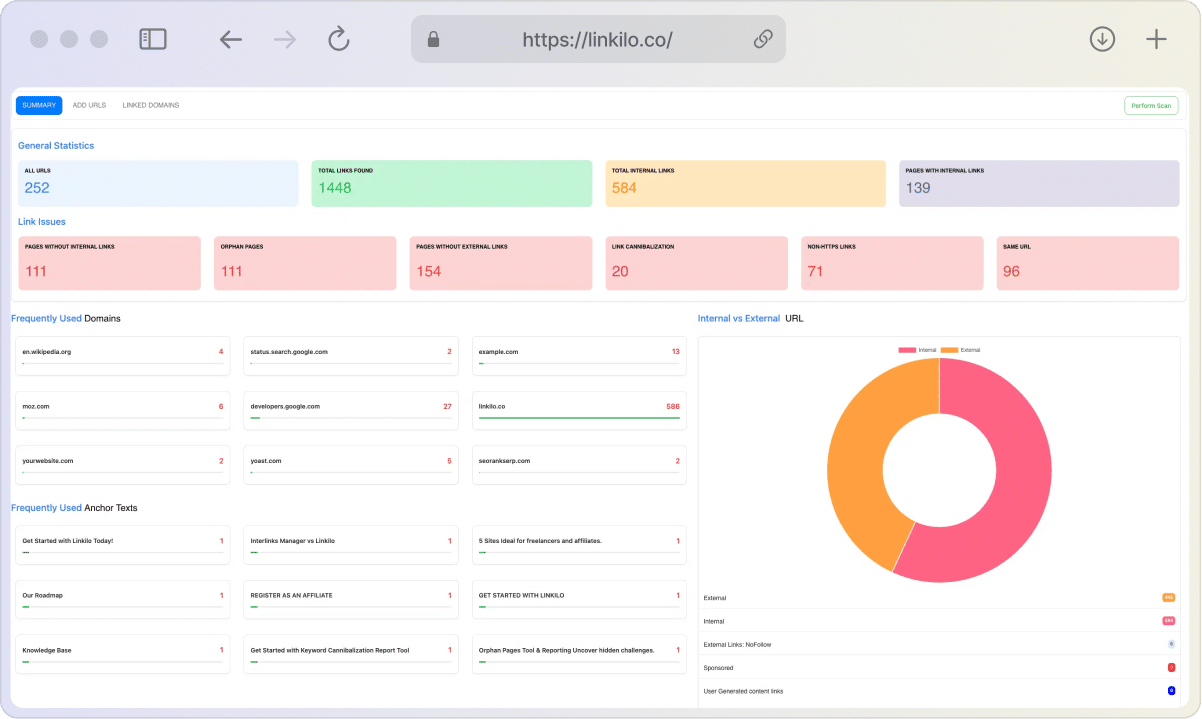

How to check crawl activity

The Crawl Stats report in Google Search Console is the ideal place to get an overview of Google’s crawl activity and any issues it found. It contains several reports that help you spot changes in crawling behavior and crawling problems, and that show you more about how Google crawls your site.

It’s also important to check for bad responses, since fixing them improves crawling. These include robots.txt not available, unauthorized (401/407), server error (5xx), and other client error (4xx). You may also see DNS unresponsive, DNS error, fetch error, and redirect error, among others.

You can also check when each page was last crawled.

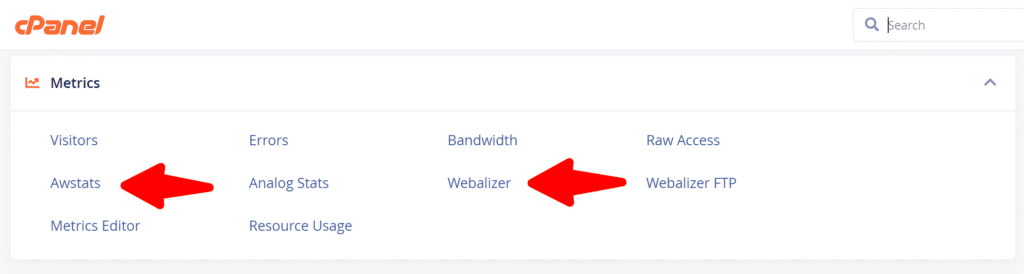

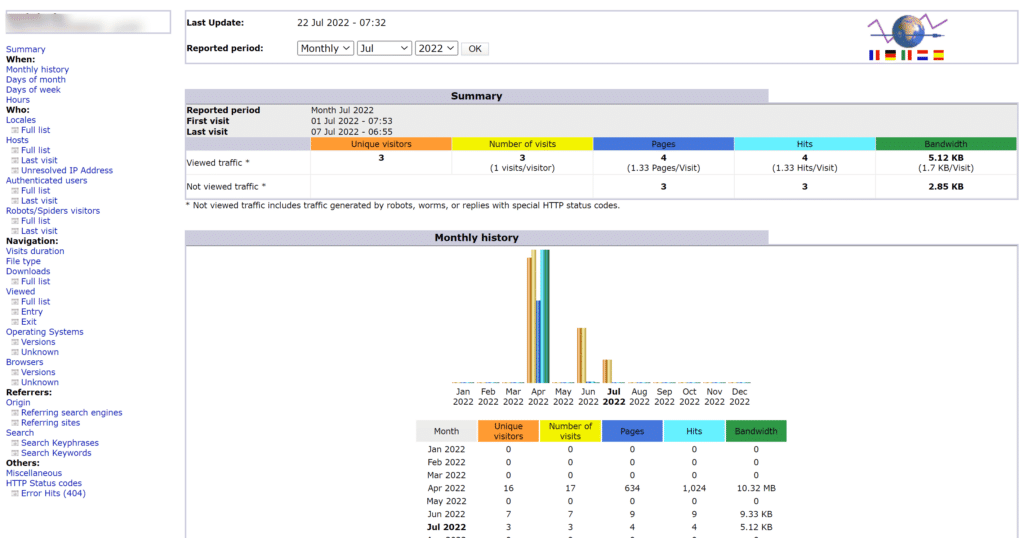

You’ll need access to your log files if you want to see hits from all bots and users. Depending on your hosting and setup, you may have access to tools like AWStats and Webalizer, as shown above on a shared host with cPanel. These tools display aggregated data from your log files.

For more complex setups, you’ll need to collect and store data from raw log files, possibly from many sources. For larger projects, you may need specialized tools such as an ELK stack (Elasticsearch, Logstash, Kibana), which lets you store, process, and visualize log files, or a log analysis tool like Splunk.

Conclusion

Crawl budget will remain a crucial consideration for SEO professionals, especially on large sites, because it determines how quickly search engines find and index the pages that matter most.