The “Discovered – currently not indexed” status means that Google is aware of these URLs but has not yet crawled them, and therefore has not indexed them.

If you have a small website (fewer than 10,000 pages) with high-quality content, this URL state usually resolves on its own as Google gets around to crawling the pages.

If you run a small website and keep getting this status for new pages, here is the one takeaway from this article:

Consider the quality of your content. It’s possible that Google doesn’t believe it’s worth their effort. The confusing part of this status is that content quality concerns aren’t restricted to the URLs listed; they might be a site-wide problem.

Having worked on several sites of my own and on many SEO projects, I can say with certainty that content quality is the number one reason.

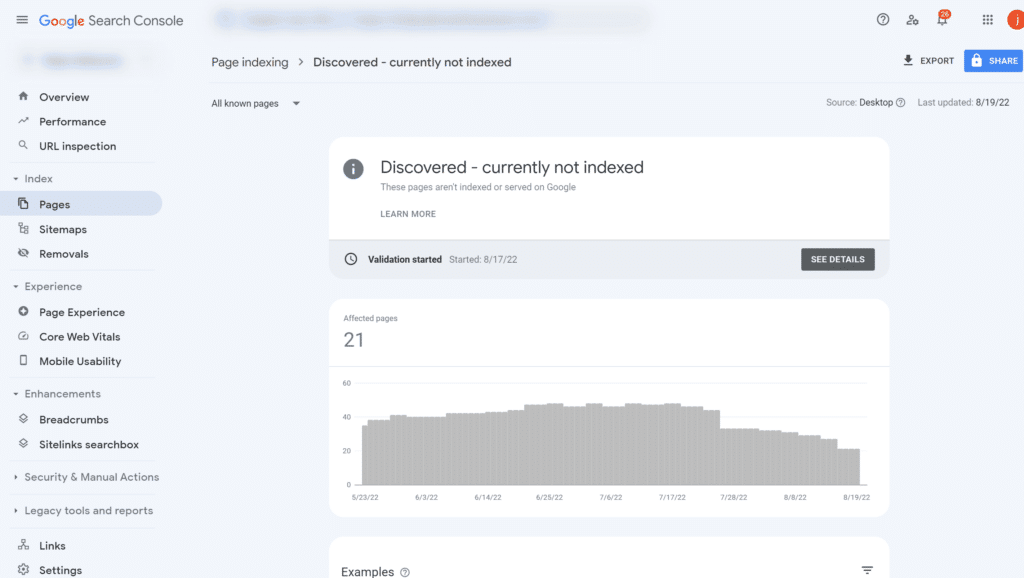

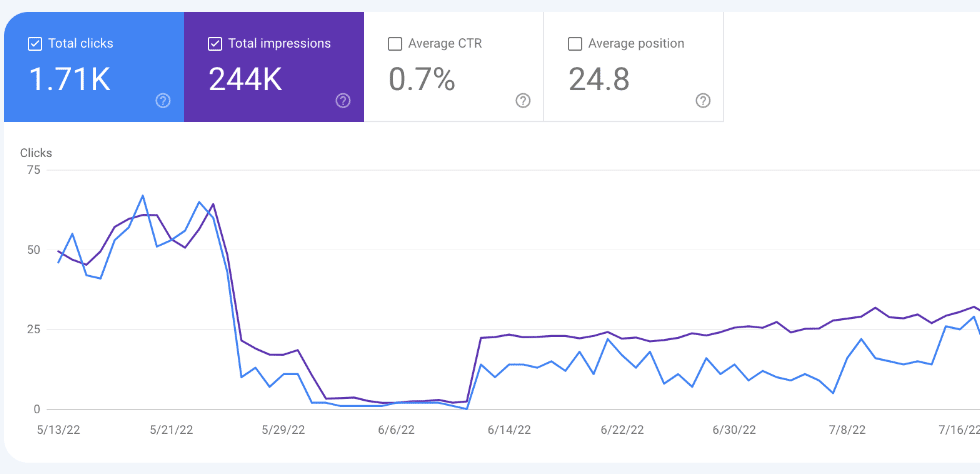

The screenshot shows one example of how I improved content quality; you can see the gradual decrease in articles marked as currently not indexed.

Difference between crawled not indexed and discovered

“Crawled–Currently Not Indexed” and “Discovered–Currently Not Indexed” are two different statuses that might confuse some users.

The primary difference between the two is that under “Crawled–Currently Not Indexed,” Google has already found and crawled the page and has decided not to index it.

For “Discovered–Currently Not Indexed,” Google has discovered the URL, for example through links on other pages or a sitemap, but has not crawled it yet and therefore has not indexed it.

This suggests that pages marked “Discovered–Currently Not Indexed” are a lower priority for Google than those marked “Crawled–Currently Not Indexed.”

Also, if your articles were in the “Discovered” section, and you’ve made some updates, they might go to the “Crawled” section before getting indexed.

Is Your Content Not Getting Indexed? We Can Help!

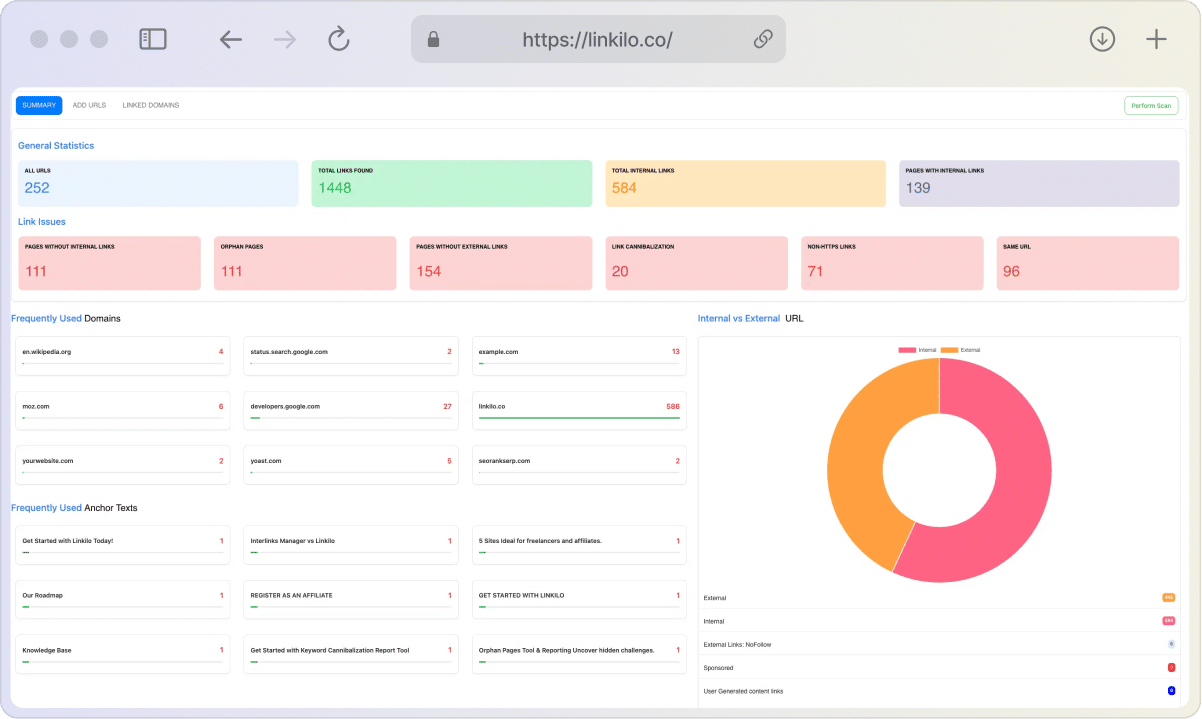

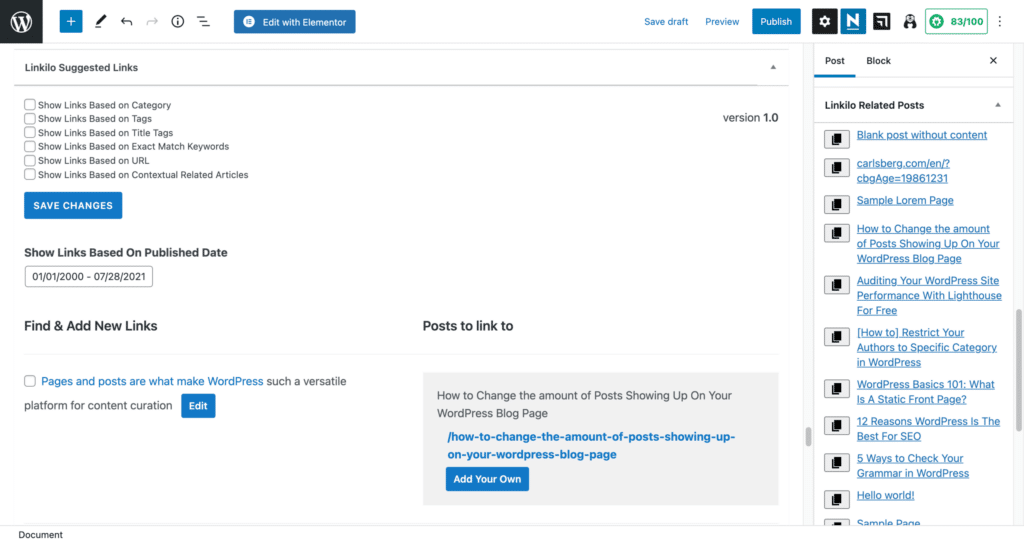

Getting your website’s content indexed by Google is crucial for visibility and organic traffic. However, issues with internal linking can hinder indexing. Linkilo’s internal linking tool for WordPress helps you identify “orphan pages” and provides link suggestions to enhance crawlability.

How long does it stay on “Discovered – Currently Not Indexed”?

What does Google have to say about this?

Google’s John Mueller responded when asked how long pages may stay discovered but not indexed:

“That can be forever. So it’s something where we just don’t crawl and index all pages. And it’s completely normal for any website that we don’t have everything indexed.

And, especially with a newer website, if you have a lot of content, then I would assume it’s expected that a lot of the new content will be discovered and not indexed for a while.

And then over time, it usually shifts over, like well, it’s actually crawled, or it’s actually indexed when we see that there’s actually value in focusing more on the website itself. But it’s not guaranteed.

So from that point of view, it’s not that I would say you should just wait a little bit, and suddenly things will get better with crawling and indexing. It’s more that, like continue working on the website and making sure that our systems recognize that there’s value in crawling and indexing more and then over time we will crawl and index more.”

For example, when you see the status “Discovered – currently not indexed,” this is where the URLs are in Google’s indexing process:

What causes this status?

If you’re seeing this problem on a larger website (10,000+ pages), it might be due to:

- Overloaded server: Google had trouble crawling your site because the server appeared overloaded. If this is the case, contact your hosting provider.

- Content overload: Your website includes far more content than Google is currently willing to crawl, because Google doesn’t consider it worth the crawl resources. Filtered product category pages, auto-generated content, and user-generated content are typical examples. If you want Google to crawl and index this content, prune it and make what remains more unique. If Google is discovering URLs that it shouldn’t, remove the links to them and update your robots.txt file to stop Google from crawling those URLs.

- Poor internal link structure: Google isn’t finding enough paths into the content that has yet to be indexed. This can be resolved by improving the internal link structure.

- Poor quality content: Improve the quality of your content. Make content that is unique and brings value to your visitors’ lives. Satisfy their user intent. Give them what they desire. Help them in resolving issues.

Please remember that the first two causes in this list are classic examples of crawl budget issues. These are a concern particularly for larger websites.

Before resolving your indexing issues

Google explicitly specifies what needs to be fixed for all URLs showing errors and helps you re-verify that issue after it has been resolved.

While you wait for Google to re-verify your site, here are three things you should do to evaluate its indexation before deciding how to fix your indexing issues—or whether you need to resolve them at all.

Analyze your affected URLs

As John Mueller mentioned, it is normal for Google not to crawl or index every page on your site, since its search engine results pages (SERPs) only surface the most relevant results.

As a result, unless it affects the most important pages to your business, such as a sign-up landing page aimed at converting clients, the currently not indexed status does not necessitate quick action.

You can export the list of affected URLs from GSC and prioritize the ones you want Google to index to decide what action to take. By classifying your affected URLs, you can also discover patterns of where the indexing problem occurs on your site and what type of webpages—blog URLs, for example—tend not to be indexed.
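To make the pattern-spotting step concrete, here is a minimal Python sketch that groups affected URLs by their first path segment. The URLs and the `group_by_section` helper are hypothetical; in practice you would load the list from your own GSC export.

```python
from collections import Counter
from urllib.parse import urlparse


def group_by_section(urls):
    """Count URLs by their first path segment, e.g. '/blog'."""
    counts = Counter()
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        section = "/" + segments[0] if segments else "/"
        counts[section] += 1
    return counts


# Hypothetical URLs standing in for a GSC export.
affected = [
    "https://example.com/blog/post-a",
    "https://example.com/blog/post-b",
    "https://example.com/tag/seo",
    "https://example.com/",
]

# Print sections from most to least affected.
for section, count in group_by_section(affected).most_common():
    print(section, count)
```

If one section dominates the counts, that is usually the place to start looking at content quality or internal linking.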

Check whether the indexing status is accurate

Next, you should regularly check the index status of your URLs, because URLs that Google reports as excluded can sometimes be found in its index after all.

Note: Pages with the excluded status are often either duplicates of indexed pages or are restricted from indexing by some mechanism on your site.

You can use Google’s site: search operator to see which of your URLs appear in its SERPs and then filter them based on whether they have been indexed.

Conduct live URL tests

You should also run a live URL test in Google’s URL inspection tool to confirm that Google can fetch your pages without crawling issues the next time it crawls them.

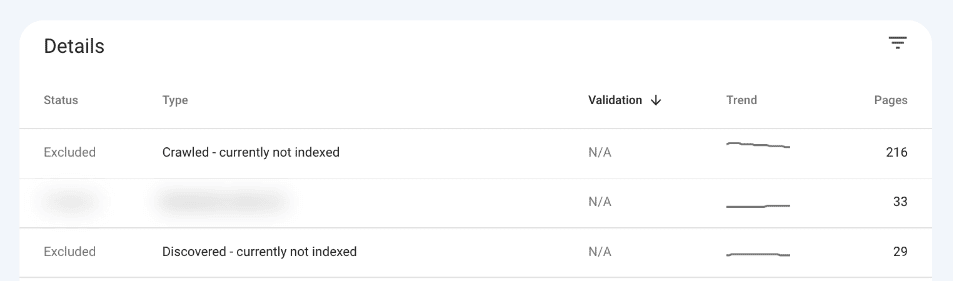

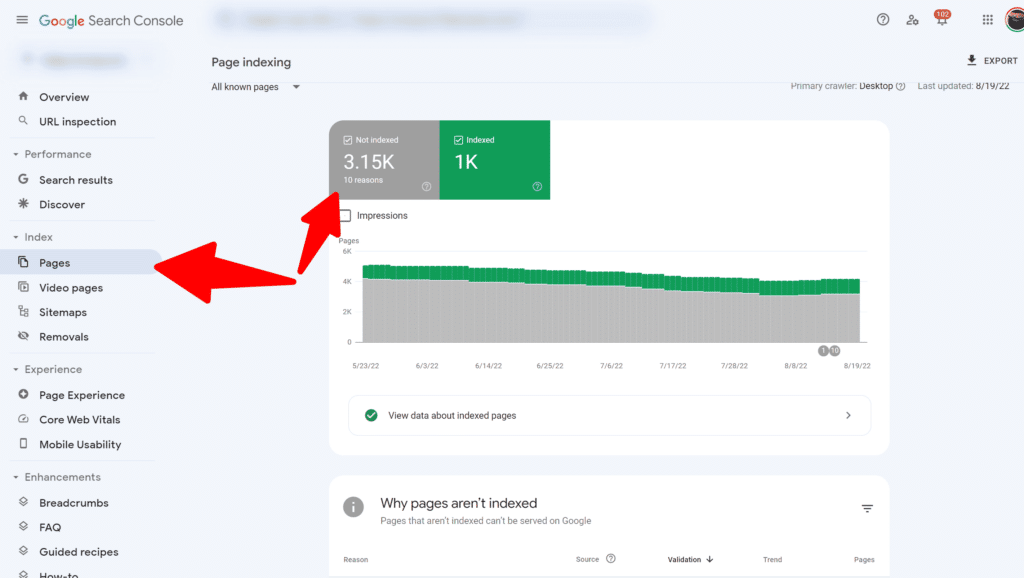

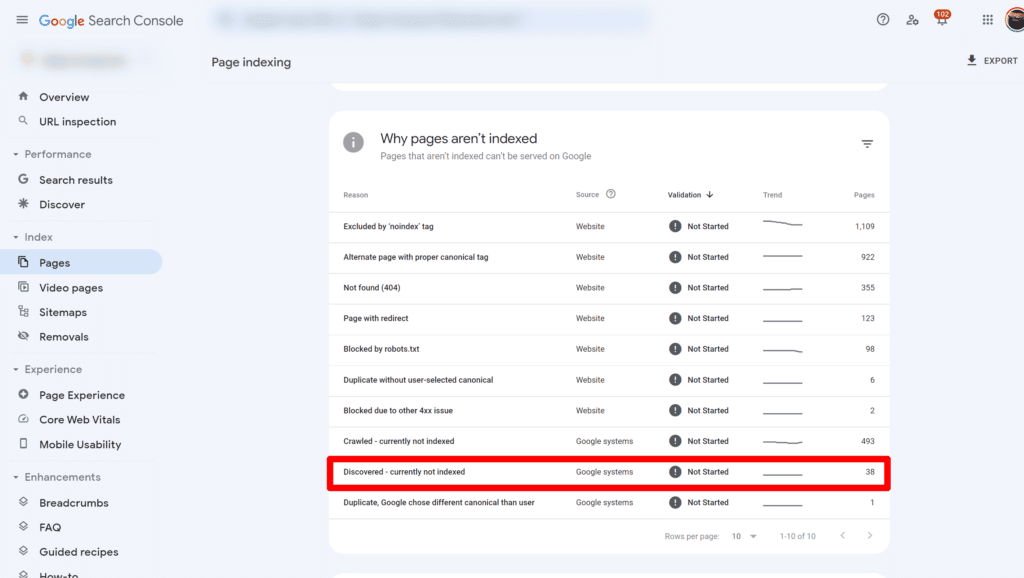

Where to find the discovered–currently not indexed status

To find Discovered–currently not indexed on Google Search Console:

Click on “Pages” in the “Index” section and click on “Not Indexed”:

The crawling and indexing statuses of your website’s pages are displayed in the report.

“Discovered – currently not indexed” appears under the Excluded category, which, according to Google, covers URLs that haven’t been indexed for reasons other than an error.

When using Google Search Console, you can see a list of affected URLs by clicking on the type of issue.

You may discover that you meant to keep some of the reported URLs from the index, which is acceptable. First, however, you should keep an eye on your valuable pages – if any of them haven’t been indexed, see what problems Google has found.

When does the “Discovered–currently not indexed” section require action?

URLs in “Discovered–currently not indexed” do not necessarily require changes to your website.

Specifically, you do not need to take any action if:

- The number of affected URLs is small and constant over time, or

- The report includes URLs that should not be crawled or indexed, such as those with canonical or ‘noindex’ tags or those that have crawling blocked in your robots.txt file.

However, it is still important to monitor this section of the report.

If the number of URLs increases, or if the list contains valuable URLs that you intend to rank and that should bring in considerable organic traffic, you should pay attention to them.

Your site might change dramatically during one of Google’s Core Updates. Here is a site that started to gain organic traffic and was then hit hard by the May Core Update; most of its URLs were de-indexed and moved into “Discovered–Currently Not Indexed.”

Troubleshooting the “discovered–currently not indexed” status

There are various reasons why you may see the “Discovered–currently not indexed” status in Google Search Console. The following are some of the more common ones that we see:

Your website is new

If your website is somewhat new, some pages may be labeled “Discovered–currently not indexed.” This is because it can take Google some time to identify and index all of the pages on your website.

Meanwhile, you can employ other strategies to increase your site’s likelihood of appearing in Google searches.

Crawl problem

The first possible problem is your robots.txt file.

A robots.txt file is a way to control how crawlers access your website. It tells Google which URLs on your website it may and may not crawl. However, if you do not use robots.txt appropriately, you could prevent Google from crawling pages you want indexed.

Google will not crawl URLs that your robots.txt file disallows. An overly broad rule can therefore keep Googlebot away from pages you do want it to find.
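One way to check this yourself is with Python’s standard `urllib.robotparser` module. The sketch below is only an approximation: the rules and URLs are made up for illustration, and Python’s parser does not replicate every detail of how Google interprets robots.txt (wildcard handling, for instance, may differ).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; replace with your own file's text.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://example.com/blog/post-a",
    "https://example.com/private/draft",
):
    print(url, is_crawlable(ROBOTS_TXT, url))
```

If a URL you want indexed comes back as not crawlable, the matching Disallow rule is the first thing to review.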

Problems with content quality

If you produce a large content volume, the quality may not be where it should be. The most common reasons for content quality issues, in our experience, are that websites produce content that falls into one of the following categories:

- Short content (less than 300 words)

- Poorly written content — information that adds no value

- Inadequate copy – does this information provide value to your website?

- Local SEO campaigns that include a large number of near-identical location pages

- Content is too similar to other web pages

Before posting content, be sure it is worthy of being indexed by Google. Google is significantly more likely to index new, unique content of real value to search users.

No internal links

Your internal linking structure is important in communicating page value to Google. For example, when you publish new content, you should update any relevant pages on your website with links to the new content. Internal links help Google understand the context of new content and make it more likely to be crawled and indexed.
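If you already have a crawl of your site, finding pages with no inbound internal links is straightforward. This is a rough sketch that assumes you have built a map from each page to the pages it links to; the `site_links` data and the `find_orphans` helper are hypothetical.

```python
def find_orphans(links, entry_points=("/",)):
    """Return pages that no other page links to.

    links maps each page to the list of pages it links to.
    Entry points such as the homepage are never reported.
    """
    linked = set()
    for source, targets in links.items():
        # Ignore self-links; they do not help crawlers reach the page.
        linked.update(t for t in targets if t != source)
    return sorted(
        page for page in links
        if page not in linked and page not in entry_points
    )


# Hypothetical link map; in practice this comes from a site crawl.
site_links = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/post-a/"],
    "/about/": [],
    "/blog/post-a/": ["/"],
    "/blog/post-b/": ["/blog/"],
}

print(find_orphans(site_links))  # ['/blog/post-b/']
```

Here `/blog/post-b/` links out to other pages, but nothing links to it, so a crawler following links from the homepage would never reach it.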

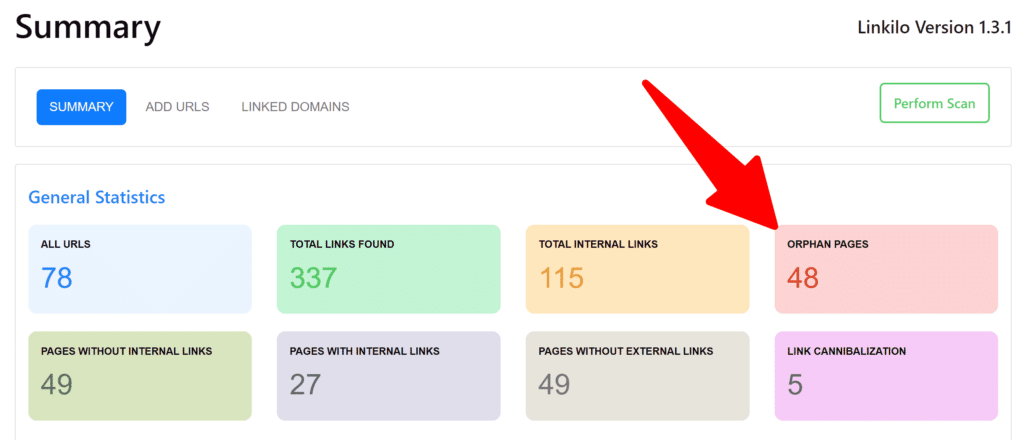

If you are using our internal linking tool for WordPress, you can quickly identify “orphan pages”:

It saves time by surfacing issues on your site quickly, and it also provides link suggestions:

Sitemap exclusion

If you’re using a static XML sitemap, the page may not have been added to it when the page was created. Instead, we recommend using a dynamic sitemap that automatically includes new URLs as they are created.

If the URL is not included in your XML sitemap, Google is likely to consider it less significant in the structure of your website, and it may be excluded from indexing.
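A quick way to spot pages missing from a sitemap is to compare it against the list of URLs you have published. This sketch assumes a standard sitemap in the sitemaps.org format; the sitemap content and the `published` list are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a sitemap document."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(sitemap_xml, published_urls):
    """Return published URLs that the sitemap does not list."""
    listed = sitemap_urls(sitemap_xml)
    return [url for url in published_urls if url not in listed]


# Hypothetical sitemap and page list.
SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/post-a/</loc></url>
</urlset>"""

published = [
    "https://example.com/",
    "https://example.com/blog/post-a/",
    "https://example.com/blog/post-b/",
]

print(missing_from_sitemap(SITEMAP, published))
```

The comparison is an exact string match, so trailing slashes and http/https differences will show up as missing URLs.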

Duplicate content

Is this content copied from another site? In some circumstances, you may be using supplier content or specifications that repeat large blocks of copy (especially in e-commerce). While Google is hazy about how duplicate content affects your results, creating original and useful content is important.

Page-level noindex meta tag

A page-level noindex meta tag may have been applied by mistake, or the page may be missing from your XML sitemap. You can test this by entering the URL into Google Search Console and running a live test.
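If you want to check a page’s HTML yourself before running the live test, a small script can look for the tag. This is a minimal sketch using Python’s standard `html.parser`; the class and function names are my own, and it only inspects meta tags, so a noindex sent through an `X-Robots-Tag` HTTP header would not be detected.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and "googlebot" tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html):
    """Return True if the HTML contains a noindex robots meta directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Hypothetical page source.
page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```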

Mistakes in implementing redirects

Implementing redirects can help your site, but only if done correctly. Each time Googlebot encounters a redirected URL, it must send an additional request to reach the destination URL, which uses more of its resources.

Make sure you follow recommended practices while implementing redirects. For example, you can redirect both users and bots from 404 error pages linked from external sources to functional pages, allowing you to keep ranking signals.

Avoid linking internally to redirected URLs; instead, update those links to point to the final destination. You should also avoid redirect loops and chains.
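Chains and loops are easy to miss by eye. The sketch below assumes you have exported your redirect rules as a simple source-to-target map; the `redirects` data and the `trace_redirect` helper are hypothetical, and nothing here makes HTTP requests.

```python
def trace_redirect(redirects, start):
    """Follow a redirect map from start.

    Returns (path, status), where status is 'ok' (zero or one hop),
    'chain' (two or more hops), or 'loop' (a URL repeats).
    """
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"
        seen.add(current)
    hops = len(path) - 1
    if hops > 1:
        return path, "chain"
    return path, "ok"


# Hypothetical redirect rules: source URL -> target URL.
redirects = {
    "/old": "/newer",
    "/newer": "/newest",
    "/a": "/b",
    "/b": "/a",
}

print(trace_redirect(redirects, "/old"))  # (['/old', '/newer', '/newest'], 'chain')
print(trace_redirect(redirects, "/a"))    # (['/a', '/b', '/a'], 'loop')
```

For a chain, the fix is usually to point the first URL straight at the last one in the path.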

Server issues

If your server appears overloaded, Google may have trouble crawling your site. This happens because the crawl rate, which affects the crawl budget, is tailored to your server’s capabilities.

One thing I usually see is that servers give intermittent errors – specifically, 500-something – and anything that your server responds to with a 500, 501, 502, 504, whatever, means your server is saying, ‘Hold on, I have a problem here […], and it could fall over at any moment, so we are backing off.

When we back off and your server responds well, we usually slowly ramp up again. Imagine receiving 500-something responses every day.

We’re seeing it. We’re backing off a bit. We’re ramping back up and seeing it again […]. So if your server responds negatively, you should investigate.

If your site is experiencing server problems, contact your hosting provider.

Poor web performance can also cause server problems.
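To see whether intermittent 5xx responses are a recurring pattern, you can compute a daily error rate from your server logs. This sketch assumes you have already parsed the logs into (day, status code) pairs; the sample data and the `daily_5xx_rate` helper are hypothetical.

```python
from collections import defaultdict


def daily_5xx_rate(requests):
    """Return {day: share of that day's requests answered with a 5xx status}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for day, status in requests:
        totals[day] += 1
        if 500 <= status <= 599:
            errors[day] += 1
    return {day: errors[day] / totals[day] for day in totals}


# Hypothetical (day, status) pairs taken from parsed access logs.
log = [
    ("2024-01-01", 200),
    ("2024-01-01", 503),
    ("2024-01-02", 200),
    ("2024-01-02", 200),
]

print(daily_5xx_rate(log))  # {'2024-01-01': 0.5, '2024-01-02': 0.0}
```

Filtering the log to Googlebot requests first gives a closer picture of what the crawler itself is seeing.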

Heavy websites

Some excessively heavy pages can cause crawling issues. For example, Google might lack the resources to crawl and render them.

Every resource that Googlebot must fetch to render your page counts against your crawl budget. In this situation, Google sees the page but moves it down the priority list.

To lessen the negative impact of your code, optimize your site’s JavaScript and CSS files.

Perform content pruning

Due to content overload, certain URLs may fall into the “Discovered – currently not indexed” category when you launch a new website or revamp an existing one. This signifies that your website has far more content than Google is prepared to spend resources crawling.

Content overload can arise when a site mistakenly auto-generates too many URLs or has too many indexed pages with little SEO value, such as tags and category pages.

Here are some solutions to content overload:

- Use canonical tags to converge ranking signals and notify Google which URL is a page’s master copy.

- To prevent Google from crawling low-priority pages you don’t want to rank for, update your robots.txt file.

- Consolidate and reuse short articles with similar themes and content into long-form content.

- Remove underperforming content that is no longer relevant to your brand.

What does John Mueller suggest?

During SEO Office Hours, John Mueller was asked how to fix the problem of 99 percent of a website’s URLs being stuck in the “Discovered – currently not indexed” section of the report.

John’s recommendations centered on three major steps:

I’d first check to see if you’re not mistakenly generating URLs with different URL patterns… things like the parameters in your URL, upper and lower case, all of these things can lead to essentially duplicate content. And if we’ve found a number of these duplicate URLs, we can conclude that we don’t need to crawl all of them since we already have some variation of this page there

Next, ascertain that everything is in order in terms of internal linking so that we can crawl through your website’s pages and make it to the end. Using a crawler tool, such as Screaming Frog or Deep Crawl, you can test this… They will simply notify you if they can crawl your website and show the URLs found during that crawl.

If the crawling is successful, I will prioritize the quality of these pages. If you have 20 million pages and 99 percent of them are not indexed, we only index a very small part of your website… Perhaps it makes sense to say, ‘Well, what if I cut the number of pages in half, or even… to 10% of the existing count?’…

More comprehensive content on these pages can improve the overall quality of the content there. And it’s a bit easier for our algorithms to look at these pages and say, ‘Well, these pages… seem very good. We should go crawl and index a lot more.’

source: John Mueller
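The first of these checks, accidental URL variations, can be approximated with a short script. This is only a sketch under some loud assumptions: it treats paths as case-insensitive, ignores trailing slashes, and drops a small hypothetical set of tracking parameters. Adjust all three to match how your site actually behaves.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change the page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def normalize(url):
    """Reduce a URL to a comparison key (lowercased, sorted query, no tracking)."""
    parts = urlsplit(url)
    query = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key.lower() not in TRACKING_PARAMS
    )
    # Assumption: case and trailing slash do not distinguish pages.
    path = parts.path.lower().rstrip("/") or "/"
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(query), "")
    )


def duplicate_groups(urls):
    """Group URLs that normalize to the same key; keep groups of two or more."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: members for key, members in groups.items() if len(members) > 1}


# Hypothetical discovered URLs.
urls = [
    "https://example.com/Shoes/",
    "https://example.com/shoes?utm_source=news",
    "https://example.com/shoes?color=red",
]

print(duplicate_groups(urls))
```

In this example the first two URLs collapse to the same key, while the `color=red` variant stays separate because the parameter may change the content.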

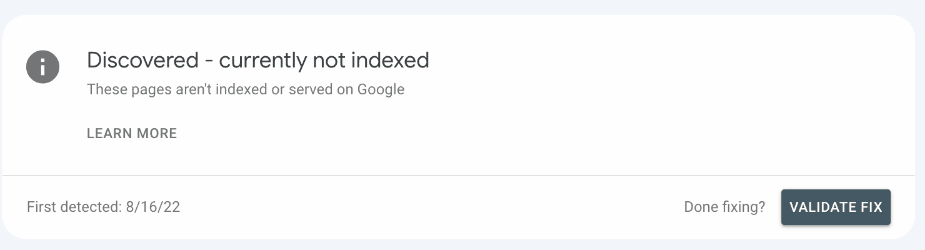

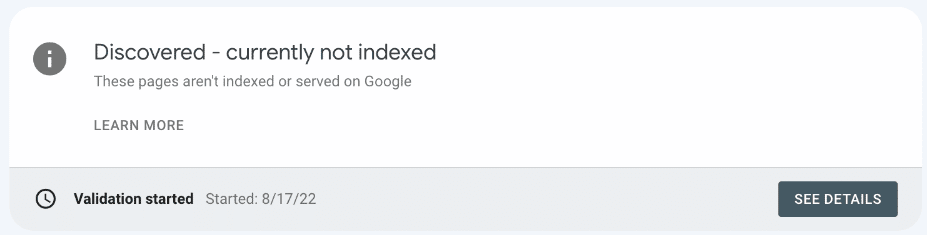

Validate the fix for Discovered – currently not indexed

After you’ve done everything you can to improve your content quality, you can click “Validate Fix,” and Google will “eventually” get around to validating those fixes.

After you click “Validate Fix,” the button changes to “See Details”:

Conclusion

Page quality and crawl budget concerns are common causes of the “Discovered–currently not indexed” status.

To resolve these issues and help Google crawl your site faster and more accurately in the future, you may need to improve various parts of your pages.

There are usually no quick fixes for getting pages indexed; however, addressing the SEO aspects covered above gives you a better chance of getting them indexed.

It’s evident that Google wants to ensure that it only indexes high-quality content, and these requirements appear to be increasing. This is especially taxing for new websites, which must repeatedly prove to Google that their content is worthy of inclusion in the index.
